AI for Hate Speech Detection

New AI Method for Hate Speech Detection and Counterspeech

Researchers at the St. Pölten University of Applied Sciences have developed a novel method for detecting and classifying hate speech and counterspeech that sets new standards in transparency and efficiency. The method is presented in the recent high-impact publication "Distilling knowledge from large language models: A concept bottleneck model for hate and counter speech recognition" (https://doi.org/10.1016/j.ipm.2025.104309), which introduces an approach to text analysis that goes beyond conventional methods.

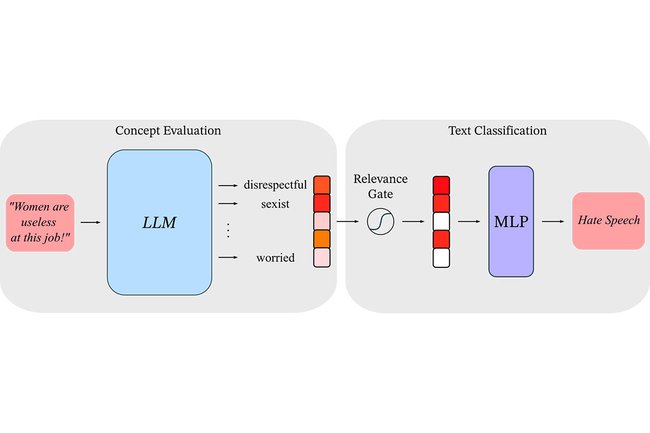

The published method, the Speech Concept Bottleneck Model (SCBM), combines modern Large Language Models (LLMs) with human-understandable concepts developed in collaboration with sociologists from the University of Vienna. These concepts, formulated as adjectives (e.g., sexist, provocative, caring), capture the intent and tone of messages and social media posts. An LLM evaluates how strongly each concept applies to the content of a message. Lightweight and transparent machine learning models are then trained on these assessments, which makes the classification of hate speech and counterspeech accurate, interpretable, and resource-efficient. The method is fast and suitable for scalable real-time applications.
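The two-stage idea described above (concept scores first, then a small transparent classifier) can be illustrated with a minimal sketch. This is not the authors' implementation: the concept scores are invented placeholders standing in for LLM output, the task is reduced to two classes, and plain logistic regression is an assumed stand-in for the lightweight model.

```python
import math

# Three example concepts taken from the article; the full concept list
# used in the publication is not reproduced here.
CONCEPTS = ["sexist", "provocative", "caring"]

# ASSUMPTION: in the real pipeline an LLM would rate how strongly each
# concept applies to a message. These scores in [0, 1] are made up.
X = [
    [0.9, 0.8, 0.1],
    [0.8, 0.9, 0.0],
    [0.7, 0.6, 0.2],
    [0.1, 0.2, 0.9],
    [0.0, 0.3, 0.8],
    [0.2, 0.1, 0.7],
]
# Toy labels: 1 = hate speech, 0 = counterspeech.
y = [1, 1, 1, 0, 0, 0]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(X, y, lr=0.5, epochs=2000):
    """Fit logistic regression with batch gradient descent."""
    w = [0.0] * len(X[0])
    b = 0.0
    n = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(x, w, b):
    """Return 1 (hate speech) or 0 (counterspeech) for one score vector."""
    return 1 if sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= 0.5 else 0


w, b = train(X, y)

# Each weight belongs to one named concept, so the model can be inspected
# directly: positive weights push towards "hate speech", negative ones
# towards "counterspeech".
for name, weight in zip(CONCEPTS, w):
    print(f"{name:12s} {weight:+.2f}")
```

Because every input feature is a named concept, the learned weights can be read as a statement about which concepts drive a decision, which is the kind of transparency the article refers to.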

This research represents an important advance in the fight against online hate speech, providing a powerful tool for detecting harmful content and promoting constructive counterspeech.

Copyright: FH St. Pölten

The following authors contributed to the publication:

- Roberto Labadie-Tamayo (St. Pölten UAS)

- Djordje Slijepčević (St. Pölten UAS)

- Xihui Chen (St. Pölten UAS)

- Adrian Jaques Böck (St. Pölten UAS)

- Andreas Babic (St. Pölten UAS)

- Liz Freimann (University of Vienna)

- Christiane Atzmüller (University of Vienna)

- Matthias Zeppelzauer (St. Pölten UAS)